More detailed descriptions of the WIAS research topics can be found on the corresponding English pages.

Publications

Monographs

-

N. Tupitsa, P. Dvurechensky, D. Dvinskikh, A. Gasnikov, Section: Computational Optimal Transport, P.M. Pardalos, O.A. Prokopyev, eds., Encyclopedia of Optimization, Springer International Publishing, Cham, published online on 11.07.2023, (Chapter Published), DOI 10.1007/978-3-030-54621-2_861-1 .

-

A. Gasnikov, D. Dvinskikh, P. Dvurechensky, E. Gorbunov, A. Beznosikov, A. Lobanov, Randomized gradient-free methods in convex optimization, P.M. Pardalos, O.A. Prokopyev, eds., Encyclopedia of Optimization, Springer International Publishing, Cham, published online on 08.09.2023, (Chapter Published), DOI 10.1007/978-3-030-54621-2_859-1 .

-

D. Kamzolov, A. Gasnikov, P. Dvurechensky, A. Agafonov, M. Takac, Exploiting Higher-order Derivatives in Convex Optimization Methods, Encyclopedia of Optimization, Springer, Cham, 2023, (Chapter Published), DOI 10.1007/978-3-030-54621-2_858-1 .

-

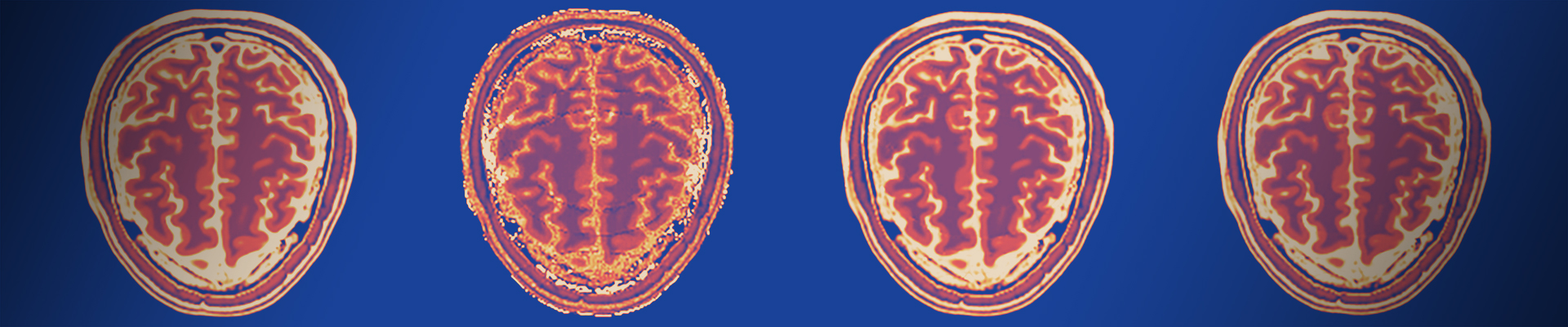

J. Polzehl, K. Tabelow, Magnetic Resonance Brain Imaging: Modeling and Data Analysis using R, 2nd Revised Edition, Series: Use R!, Springer International Publishing, Cham, 2023, 258 pages, (Monograph Published), DOI 10.1007/978-3-031-38949-8 .

Abstract

This book discusses the modeling and analysis of magnetic resonance imaging (MRI) data acquired from the human brain. The data processing pipelines described rely on R. The book is intended for readers from two communities: Statisticians who are interested in neuroimaging and looking for an introduction to the acquired data and typical scientific problems in the field; and neuroimaging students wanting to learn about the statistical modeling and analysis of MRI data. Offering a practical introduction to the field, the book focuses on those problems in data analysis for which implementations within R are available. It also includes fully worked examples and as such serves as a tutorial on MRI analysis with R, from which the readers can derive their own data processing scripts. The book starts with a short introduction to MRI and then examines the process of reading and writing common neuroimaging data formats to and from the R session. The main chapters cover three common MR imaging modalities and their data modeling and analysis problems: functional MRI, diffusion MRI, and Multi-Parameter Mapping. The book concludes with extended appendices providing details of the non-parametric statistics used and the resources for R and MRI data. The book also addresses the issues of reproducibility and topics like data organization and description, as well as open data and open science. It relies solely on a dynamic report generation with knitr and uses neuroimaging data publicly available in data repositories. The PDF was created executing the R code in the chunks and then running LaTeX, which means that almost all figures, numbers, and results were generated while producing the PDF from the sources.

-

M. Danilova, P. Dvurechensky, A. Gasnikov, E. Gorbunov, S. Guminov, D. Kamzolov, I. Shibaev, Chapter: Recent Theoretical Advances in Non-convex Optimization, A. Nikeghbali, P.M. Pardalos, A.M. Raigorodskii, M.Th. Rassias, eds., vol. 191 of Springer Optimization and Its Applications, Springer, Cham, 2022, pp. 79--163, (Chapter Published), DOI 10.1007/978-3-031-00832-0_3 .

-

L. Starke, K. Tabelow, Th. Niendorf, A. Pohlmann, Chapter 34: Denoising for Improved Parametric MRI of the Kidney: Protocol for Nonlocal Means Filtering, in: Preclinical MRI of the Kidney: Methods and Protocols, A. Pohlmann, Th. Niendorf, eds., vol. 2216 of Methods in Molecular Biology, Springer Nature Switzerland AG, Cham, 2021, pp. 565--576, (Chapter Published), DOI 10.1007/978-1-0716-0978-1_34 .

-

M. Hintermüller, K. Papafitsoros, Chapter 11: Generating Structured Nonsmooth Priors and Associated Primal-dual Methods, in: Processing, Analyzing and Learning of Images, Shapes, and Forms: Part 2, R. Kimmel, X.-Ch. Tai, eds., vol. 20 of Handbook of Numerical Analysis, Elsevier, 2019, pp. 437--502, (Chapter Published), DOI 10.1016/bs.hna.2019.08.001 .

-

J. Polzehl, K. Tabelow, Magnetic Resonance Brain Imaging: Modeling and Data Analysis using R, Series: Use R!, Springer International Publishing, Cham, 2019, 231 pages, (Monograph Published), DOI 10.1007/978-3-030-29184-6 .

Abstract

This book discusses the modeling and analysis of magnetic resonance imaging (MRI) data acquired from the human brain. The data processing pipelines described rely on R. The book is intended for readers from two communities: Statisticians who are interested in neuroimaging and looking for an introduction to the acquired data and typical scientific problems in the field; and neuroimaging students wanting to learn about the statistical modeling and analysis of MRI data. Offering a practical introduction to the field, the book focuses on those problems in data analysis for which implementations within R are available. It also includes fully worked examples and as such serves as a tutorial on MRI analysis with R, from which the readers can derive their own data processing scripts. The book starts with a short introduction to MRI and then examines the process of reading and writing common neuroimaging data formats to and from the R session. The main chapters cover three common MR imaging modalities and their data modeling and analysis problems: functional MRI, diffusion MRI, and Multi-Parameter Mapping. The book concludes with extended appendices providing details of the non-parametric statistics used and the resources for R and MRI data. The book also addresses the issues of reproducibility and topics like data organization and description, as well as open data and open science. It relies solely on a dynamic report generation with knitr and uses neuroimaging data publicly available in data repositories. The PDF was created executing the R code in the chunks and then running LaTeX, which means that almost all figures, numbers, and results were generated while producing the PDF from the sources.

-

J. Polzehl, K. Tabelow, Chapter 4: Structural Adaptive Smoothing: Principles and Applications in Imaging, in: Mathematical Methods for Signal and Image Analysis and Representation, L. Florack, R. Duits, G. Jongbloed, M.-C. van Lieshout, L. Davies, eds., vol. 41 of Computational Imaging and Vision, Springer, London et al., 2012, pp. 65--81, (Chapter Published).

-

K. Tabelow, B. Whitcher, eds., Magnetic Resonance Imaging in R, vol. 44 of Journal of Statistical Software, American Statistical Association, 2011, 320 pages, (Monograph Published).

Articles in Refereed Journals

-

G. Dong, M. Hintermüller, C. Sirotenko, Dictionary learning based regularization in quantitative MRI: A nested alternating optimization framework, Inverse Problems. An International Journal on the Theory and Practice of Inverse Problems, Inverse Methods and Computerized Inversion of Data, 41 (2025), pp. 085007/1--085007/47, DOI 10.1088/1361-6420/adef74 .

Abstract

In this article we propose a novel regularization method for a class of nonlinear inverse problems that is inspired by an application in quantitative magnetic resonance imaging (MRI). It is a special instance of a general dynamical image reconstruction problem with an underlying time discrete physical model. Our regularization strategy is based on dictionary learning, a method that has been proven to be effective in classical MRI. To address the resulting non-convex and non-smooth optimization problem, we alternate between updating the physical parameters of interest via a Levenberg-Marquardt approach and performing several iterations of a dictionary learning algorithm. This process falls under the category of nested alternating optimization schemes. We develop a general such algorithmic framework, integrated with the Levenberg-Marquardt method, of which the convergence theory is not directly available from the literature. Global sub-linear and local strong linear convergence in infinite dimensions under certain regularity conditions for the sub-differentials are investigated based on the Kurdyka-Łojasiewicz inequality. Eventually, numerical experiments demonstrate the practical potential and unresolved challenges of the method.

-

E. Gladin, A. Gasnikov, P. Dvurechensky, Accuracy certificates for convex minimization with inexact oracle, Journal of Optimization Theory and Applications, 204 (2025), pp. 1/1--1/23, DOI 10.1007/s10957-024-02599-9 .

Abstract

Accuracy certificates for convex minimization problems allow for online verification of the accuracy of approximate solutions and provide a theoretically valid online stopping criterion. When solving the Lagrange dual problem, accuracy certificates produce a simple way to recover an approximate primal solution and estimate its accuracy. In this paper, we generalize accuracy certificates for the setting of inexact first-order oracle, including the setting of primal and Lagrange dual pair of problems. We further propose an explicit way to construct accuracy certificates for a large class of cutting plane methods based on polytopes. As a by-product, we show that the considered cutting plane methods can be efficiently used with a noisy oracle even though they were originally designed to be equipped with an exact oracle. Finally, we illustrate the work of the proposed certificates in the numerical experiments highlighting that our certificates provide a tight upper bound on the objective residual.

-

N. Kornilov, M. Alkousa, E. Gorbunov, F. Stonyakin, P. Dvurechensky, A. Gasnikov, Intermediate gradient methods with relative inexactness, Journal of Optimization Theory and Applications, 207 (2025), published online on 22.08.2025, DOI 10.1007/s10957-025-02809-y .

-

D. Pasechniuk, P. Dvurechensky, C.A. Uribe, A. Gasnikov, Decentralised convex optimisation with probability-proportional-to-size quantization, EURO Journal on Computational Optimization, 13 (2025), published online on 22.07.2025, DOI 10.1016/j.ejco.2025.100113 .

-

L. Schmitz, N. Tapia, Free generators and Hoffman's isomorphism for the two-parameter shuffle algebra, Communications in Algebra, (2025), published online: 01.11.2025, DOI 10.1080/00927872.2025.2569445 .

Abstract

Signature transforms have recently been extended to data indexed by two and more parameters. With free Lyndon generators, ideas from B∞-algebras and a novel two-parameter Hoffman exponential, we provide three classes of isomorphisms between the underlying two-parameter shuffle and quasi-shuffle algebras. In particular, we provide a Hopf algebraic connection to the (classical, one-parameter) shuffle algebra over the extended alphabet of connected matrix compositions.

-

P. Dvurechensky, Y. Nesterov, Improved global performance guarantees of second-order methods in convex minimization, Foundations of Computational Mathematics. The Journal of the Society for the Foundations of Computational Mathematics, (2025), published online on 13.08.2025, DOI 10.1007/s10208-025-09726-6 .

-

G. David, B. Fricke, J.M. Oeschger, L. Ruthotto, F.J. Fritz, O. Ohana, L. Mordhorst, Th. Sauvigny, P. Freund, K. Tabelow, S. Mohammadi, ACID: A comprehensive toolbox for image processing and modeling of brain, spinal cord, and ex vivo diffusion MRI data, Imaging Neuroscience, 2 (2024), pp. imag_a_00288/1--imag_a_00288/34, DOI 10.1162/imag_a_00288 .

-

G. Dong, M. Flaschel, M. Hintermüller, K. Papafitsoros, C. Sirotenko, K. Tabelow, Data-driven methods for quantitative imaging, GAMM-Mitteilungen, 48 (2025), pp. e202470014/1--e202470014/35 (published online on 06.11.2024), DOI 10.1002/gamm.202470014 .

Abstract

In the field of quantitative imaging, the image information at a pixel or voxel in an underlying domain entails crucial information about the imaged matter. This is particularly important in medical imaging applications, such as quantitative Magnetic Resonance Imaging (qMRI), where quantitative maps of biophysical parameters can characterize the imaged tissue and thus lead to more accurate diagnoses. Such quantitative values can also be useful in subsequent, automatized classification tasks in order to discriminate normal from abnormal tissue, for instance. The accurate reconstruction of these quantitative maps is typically achieved by solving two coupled inverse problems which involve a (forward) measurement operator, typically ill-posed, and a physical process that links the wanted quantitative parameters to the reconstructed qualitative image, given some underlying measurement data. In this review, by considering qMRI as a prototypical application, we provide a mathematically-oriented overview on how data-driven approaches can be employed in these inverse problems eventually improving the reconstruction of the associated quantitative maps.

-

A. Rogozin, A. Beznosikov, D. Dvinskikh, D. Kovalev, P. Dvurechensky, A. Gasnikov, Decentralized saddle point problems via non-Euclidean mirror prox, Optimization Methods & Software, 40 (2025), pp. 1127--1152, DOI 10.1080/10556788.2023.2280062 .

-

P. Dvurechensky, P. Ostroukhov, A. Gasnikov, C.A. Uribe, A. Ivanova, Near-optimal tensor methods for minimizing the gradient norm of convex functions and accelerated primal-dual tensor methods, Optimization Methods & Software, 39 (2024), pp. 1068--1103, DOI 10.1080/10556788.2023.2296443 .

-

P. Dvurechensky, M. Staudigl, Hessian barrier algorithms for non-convex conic optimization, Mathematical Programming. A Publication of the Mathematical Programming Society, 209 (2025), pp. 171--229 (published online on 04.03.2024), DOI 10.1007/s10107-024-02062-7 .

Abstract

We consider the minimization of a continuous function over the intersection of a regular cone with an affine set via a new class of adaptive first- and second-order optimization methods, building on the Hessian-barrier techniques introduced in [Bomze, Mertikopoulos, Schachinger, and Staudigl, Hessian barrier algorithms for linearly constrained optimization problems, SIAM Journal on Optimization, 2019]. Our approach is based on a potential-reduction mechanism and attains a suitably defined class of approximate first- or second-order KKT points with the optimal worst-case iteration complexity $O(\varepsilon^{-2})$ (first-order) and $O(\varepsilon^{-3/2})$ (second-order), respectively. A key feature of our methodology is the use of self-concordant barrier functions to construct strictly feasible iterates via a disciplined decomposition approach and without sacrificing on the iteration complexity of the method. To the best of our knowledge, this work is the first which achieves these worst-case complexity bounds under such weak conditions for general conic constrained optimization problems.

-

F. Galarce Marín, K. Tabelow, J. Polzehl, Ch.P. Papanikas, V. Vavourakis, L. Lilaj, I. Sack, A. Caiazzo, Displacement and pressure reconstruction from magnetic resonance elastography images: Application to an in silico brain model, SIAM Journal on Imaging Sciences, 16 (2023), pp. 996--1027, DOI 10.1137/22M149363X .

Abstract

This paper investigates a data assimilation approach for non-invasive quantification of intracranial pressure from partial displacement data, acquired through magnetic resonance elastography. Data assimilation is based on a parametrized-background data weak methodology, in which the state of the physical system (tissue displacements and pressure fields) is reconstructed from partially available data assuming an underlying poroelastic biomechanics model. For this purpose, a physics-informed manifold is built by sampling the space of parameters describing the tissue model close to their physiological ranges, to simulate the corresponding poroelastic problem, and compute a reduced basis. Displacements and pressure reconstruction is sought in a reduced space after solving a minimization problem that encompasses both the structure of the reduced-order model and the available measurements. The proposed pipeline is validated using synthetic data obtained after simulating the poroelastic mechanics on a physiological brain. The numerical experiments demonstrate that the framework can exhibit accurate joint reconstructions of both displacement and pressure fields. The methodology can be formulated for an arbitrary resolution of available displacement data from pertinent images. It can also inherently handle uncertainty on the physical parameters of the mechanical model by enlarging the physics-informed manifold accordingly. Moreover, the framework can be used to characterize, in silico, biomarkers for pathological conditions, by appropriately training the reduced-order model. A first application for the estimation of ventricular pressure as an indicator of abnormal intracranial pressure is shown in this contribution.

-

A. Agafonov, D. Kamzolov, P. Dvurechensky, A. Gasnikov, Inexact tensor methods and their application to stochastic convex optimization, Optimization Methods & Software, 39 (2024), pp. 42--83 (published online in Nov. 2023), DOI 10.1080/10556788.2023.2261604 .

-

A. Vasin, A. Gasnikov, P. Dvurechensky, V. Spokoiny, Accelerated gradient methods with absolute and relative noise in the gradient, Optimization Methods & Software, published online in June 2023, DOI 10.1080/10556788.2023.2212503 .

-

E. Borodich, V. Tominin, Y. Tominin, D. Kovalev, A. Gasnikov, P. Dvurechensky, Accelerated variance-reduced methods for saddle-point problems, EURO Journal on Computational Optimization, 10 (2022), pp. 100048/1--100048/32, DOI 10.1016/j.ejco.2022.100048 .

-

A. Ivanova, P. Dvurechensky, E. Vorontsova, D. Pasechnyuk, A. Gasnikov, D. Dvinskikh, A. Tyurin, Oracle complexity separation in convex optimization, Journal of Optimization Theory and Applications, 193 (2022), pp. 462--490, DOI 10.1007/s10957-022-02038-7 .

Abstract

Ubiquitous in machine learning, regularized empirical risk minimization problems are often composed of several blocks which can be treated using different types of oracles, e.g., full gradient, stochastic gradient or coordinate derivative. Optimal oracle complexity is known and achievable separately for the full gradient case, the stochastic gradient case, etc. We propose a generic framework to combine optimal algorithms for different types of oracles in order to achieve separate optimal oracle complexity for each block, i.e. for each block the corresponding oracle is called the optimal number of times for a given accuracy. As a particular example, we demonstrate that for a combination of a full gradient oracle and either a stochastic gradient oracle or a coordinate descent oracle our approach leads to the optimal number of oracle calls separately for the full gradient part and the stochastic/coordinate descent part.

-

G. Dong, M. Hintermüller, K. Papafitsoros, Optimization with learning-informed differential equation constraints and its applications, ESAIM. Control, Optimisation and Calculus of Variations, 28 (2022), pp. 3/1--3/44, DOI 10.1051/cocv/2021100 .

Abstract

Inspired by applications in optimal control of semilinear elliptic partial differential equations and physics-integrated imaging, differential equation constrained optimization problems with constituents that are only accessible through data-driven techniques are studied. A particular focus is on the analysis and on numerical methods for problems with machine-learned components. For a rather general context, an error analysis is provided, and particular properties resulting from artificial neural network based approximations are addressed. Moreover, for each of the two inspiring applications analytical details are presented and numerical results are provided.

-

E. Gorbunov, P. Dvurechensky, A. Gasnikov, An accelerated method for derivative-free smooth stochastic convex optimization, SIAM Journal on Optimization, 32 (2022), pp. 1210--1238, DOI 10.1137/19M1259225 .

Abstract

We consider an unconstrained problem of minimization of a smooth convex function which is only available through noisy observations of its values, the noise consisting of two parts. Similar to stochastic optimization problems, the first part is of a stochastic nature. In contrast, the second part is an additive noise of an unknown nature, but bounded in the absolute value. In the two-point feedback setting, i.e. when pairs of function values are available, we propose an accelerated derivative-free algorithm together with its complexity analysis. The complexity bound of our derivative-free algorithm is only by a factor of $\sqrt{n}$ larger than the bound for accelerated gradient-based algorithms, where n is the dimension of the decision variable. We also propose a non-accelerated derivative-free algorithm with a complexity bound similar to the stochastic-gradient-based algorithm, that is, our bound does not have any dimension-dependent factor. Interestingly, if the solution of the problem is sparse, for both our algorithms, we obtain better complexity bound if the algorithm uses a 1-norm proximal setup, rather than the Euclidean proximal setup, which is a standard choice for unconstrained problems.

-

S. Mohammadi, T. Streubel, L. Klock, A. Lutti, K. Pine, S. Weber, L. Edwards, P. Scheibe, G. Ziegler, J. Gallinat, S. Kuhn, M. Callaghan, N. Weiskopf, K. Tabelow, Error quantification in multi-parameter mapping facilitates robust estimation and enhanced group level sensitivity, NeuroImage, 262 (2022), pp. 119529/1--119529/14, DOI 10.1016/j.neuroimage.2022.119529 .

Abstract

Multi-Parameter Mapping (MPM) is a comprehensive quantitative neuroimaging protocol that enables estimation of four physical parameters (longitudinal and effective transverse relaxation rates R1 and R2*, proton density PD, and magnetization transfer saturation MTsat) that are sensitive to microstructural tissue properties such as iron and myelin content. Their capability to reveal microstructural brain differences, however, is tightly bound to controlling random noise and artefacts (e.g. caused by head motion) in the signal. Here, we introduced a method to estimate the local error of PD, R1, and MTsat maps that captures both noise and artefacts on a routine basis without requiring additional data. To investigate the method's sensitivity to random noise, we calculated the model-based signal-to-noise ratio (mSNR) and showed in measurements and simulations that it correlated linearly with an experimental raw-image-based SNR map. We found that the mSNR varied with MPM protocols, magnetic field strength (3T vs. 7T) and MPM parameters: it halved from PD to R1 and decreased from PD to MTsat by a factor of 3-4. Exploring the artefact-sensitivity of the error maps, we generated robust MPM parameters using two successive acquisitions of each contrast and the acquisition-specific errors to down-weight erroneous regions. The resulting robust MPM parameters showed reduced variability at the group level as compared to their single-repeat or averaged counterparts. The error and mSNR maps may better inform power-calculations by accounting for local data quality variations across measurements. Code to compute the mSNR maps and robustly combined MPM maps is available in the open-source hMRI toolbox.

-

J.M. Oeschger, K. Tabelow, S. Mohammadi, Axisymmetric diffusion kurtosis imaging with Rician bias correction: A simulation study, Magnetic Resonance in Medicine, 89 (2023), pp. 787--799 (published online on 05.10.2022), DOI 10.1002/mrm.29474 .

-

D. Tiapkin, A. Gasnikov, P. Dvurechensky, Stochastic saddle-point optimization for the Wasserstein barycenter problem, Optimization Letters, 16 (2022), pp. 2145--2175, DOI 10.1007/s11590-021-01834-w .

-

P. Dvurechensky, D. Kamzolov, A. Lukashevich, S. Lee, E. Ordentlich, C.A. Uribe, A. Gasnikov, Hyperfast second-order local solvers for efficient statistically preconditioned distributed optimization, EURO Journal on Computational Optimization, 10 (2022), pp. 100045/1--100045/35, DOI 10.1016/j.ejco.2022.100045 .

-

P. Dvurechensky, K. Safin, S. Shtern, M. Staudigl, Generalized self-concordant analysis of Frank--Wolfe algorithms, Mathematical Programming. A Publication of the Mathematical Programming Society, 198 (2023), pp. 255--323 (published online on 29.01.2022), DOI 10.1007/s10107-022-01771-1 .

Abstract

Projection-free optimization via different variants of the Frank--Wolfe method has become one of the cornerstones of large scale optimization for machine learning and computational statistics. Numerous applications within these fields involve the minimization of functions with self-concordance like properties. Such generalized self-concordant functions do not necessarily feature a Lipschitz continuous gradient, nor are they strongly convex, making them a challenging class of functions for first-order methods. Indeed, in a number of applications, such as inverse covariance estimation or distance-weighted discrimination problems in binary classification, the loss is given by a generalized self-concordant function having potentially unbounded curvature. For such problems projection-free minimization methods have no theoretical convergence guarantee. This paper closes this apparent gap in the literature by developing provably convergent Frank--Wolfe algorithms with standard O(1/k) convergence rate guarantees. Based on these new insights, we show how these sublinearly convergent methods can be accelerated to yield linearly convergent projection-free methods, by either relying on the availability of a local linear minimization oracle, or a suitable modification of the away-step Frank--Wolfe method.

-

M. Hintermüller, K. Papafitsoros, C.N. Rautenberg, H. Sun, Dualization and automatic distributed parameter selection of total generalized variation via bilevel optimization, Numerical Functional Analysis and Optimization. An International Journal, 43 (2022), pp. 887--932, DOI 10.1080/01630563.2022.2069812 .

Abstract

Total Generalized Variation (TGV) regularization in image reconstruction relies on an infimal convolution type combination of generalized first- and second-order derivatives. This helps to avoid the staircasing effect of Total Variation (TV) regularization, while still preserving sharp contrasts in images. The associated regularization effect crucially hinges on two parameters whose proper adjustment represents a challenging task. In this work, a bilevel optimization framework with a suitable statistics-based upper level objective is proposed in order to automatically select these parameters. The framework allows for spatially varying parameters, thus enabling better recovery in high-detail image areas. A rigorous dualization framework is established, and for the numerical solution, two Newton type methods for the solution of the lower level problem, i.e. the image reconstruction problem, and two bilevel TGV algorithms are introduced, respectively. Denoising tests confirm that automatically selected distributed regularization parameters lead in general to improved reconstructions when compared to results for scalar parameters.

-

A. Gasnikov, D. Dvinskikh, P. Dvurechensky, D. Kamzolov, V. Matyukhin, D. Pasechnyuk, N. Tupitsa, A. Chernov, Accelerated meta-algorithm for convex optimization, Computational Mathematics and Mathematical Physics, 61 (2021), pp. 17--28, DOI 10.1134/S096554252101005X .

-

F. Stonyakin, A. Tyurin, A. Gasnikov, P. Dvurechensky, A. Agafonov, D. Dvinskikh, M. Alkousa, D. Pasechnyuk, S. Artamonov, V. Piskunova, Inexact model: A framework for optimization and variational inequalities, Optimization Methods & Software, published online in July 2021, DOI 10.1080/10556788.2021.1924714 .

Abstract

In this paper we propose a general algorithmic framework for first-order methods in optimization in a broad sense, including minimization problems, saddle-point problems and variational inequalities. This framework allows one to obtain many known methods as special cases, the list including accelerated gradient method, composite optimization methods, level-set methods, proximal methods. The idea of the framework is based on constructing an inexact model of the main problem component, i.e. objective function in optimization or operator in variational inequalities. Besides reproducing known results, our framework allows one to construct new methods, which we illustrate by constructing a universal method for variational inequalities with composite structure. This method works for smooth and non-smooth problems with optimal complexity without a priori knowledge of the problem smoothness. We also generalize our framework for strongly convex objectives and strongly monotone variational inequalities.

-

N. Tupitsa, P. Dvurechensky, A. Gasnikov, S. Guminov, Alternating minimization methods for strongly convex optimization, Journal of Inverse and Ill-Posed Problems, 29 (2021), pp. 721--739, DOI 10.1515/jiip-2020-0074 .

Abstract

We consider alternating minimization procedures for convex optimization problems with variables divided into many blocks, each block being amenable to minimization with respect to its variables while the other blocks are kept frozen. In the case of two blocks, we prove a linear convergence rate for the alternating minimization procedure under the Polyak-Łojasiewicz condition, which can be seen as a relaxation of the strong convexity assumption. Under the strong convexity assumption in the many-blocks setting, we provide an accelerated alternating minimization procedure with a linear rate depending on the square root of the condition number, as opposed to the condition number for the non-accelerated method.

-

P. Dvurechensky, M. Staudigl, S. Shtern, First-order methods for convex optimization, EURO Journal on Computational Optimization, 9 (2021), pp. 100015/1--100015/27, DOI 10.1016/j.ejco.2021.100015 .

Abstract

First-order methods for solving convex optimization problems have been at the forefront of mathematical optimization in the last 20 years. The rapid development of this important class of algorithms is motivated by the success stories reported in various applications, including most importantly machine learning, signal processing, imaging and control theory. First-order methods have the potential to provide low accuracy solutions at low computational complexity which makes them an attractive set of tools in large-scale optimization problems. In this survey we cover a number of key developments in gradient-based optimization methods. This includes non-Euclidean extensions of the classical proximal gradient method, and its accelerated versions. Additionally we survey recent developments within the class of projection-free methods, and proximal versions of primal-dual schemes. We give complete proofs for various key results, and highlight the unifying aspects of several optimization algorithms.

-

Y.Y. Park, J. Polzehl, S. Chatterjee, A. Brechmann, M. Fiecas, Semiparametric modeling of time-varying activation and connectivity in task-based fMRI data, Computational Statistics & Data Analysis, 150 (2020), pp. 107006/1--107006/14, DOI 10.1016/j.csda.2020.107006 .

Abstract

In functional magnetic resonance imaging (fMRI), there is a rise in evidence that time-varying functional connectivity, or dynamic functional connectivity (dFC), which measures changes in the synchronization of brain activity, provides additional information on brain networks not captured by time-invariant (i.e., static) functional connectivity. While there have been many developments for statistical models of dFC in resting-state fMRI, there remains a gap in the literature on how to simultaneously model both dFC and time-varying activation when the study participants are undergoing experimental tasks designed to probe at a cognitive process of interest. A method is proposed to estimate dFC between two regions of interest (ROIs) in task-based fMRI where the activation effects are also allowed to vary over time. The proposed method, called TVAAC (time-varying activation and connectivity), uses penalized splines to model both time-varying activation effects and time-varying functional connectivity and uses the bootstrap for statistical inference. Simulation studies show that TVAAC can estimate both static and time-varying activation and functional connectivity, while ignoring time-varying activation effects would lead to poor estimation of dFC. An empirical illustration is provided by applying TVAAC to analyze two subjects from an event-related fMRI learning experiment.

-

J. Polzehl, K. Papafitsoros, K. Tabelow, Patch-wise adaptive weights smoothing in R, Journal of Statistical Software, 95 (2020), pp. 1--27, DOI 10.18637/jss.v095.i06 .

Abstract

Image reconstruction from noisy data has a long history of methodological development and is based on a variety of ideas. In this paper we introduce a new method called patch-wise adaptive smoothing that extends the Propagation-Separation approach by using comparisons of local patches of image intensities to define local adaptive weighting schemes for an improved balance of reduced variability and bias in the reconstruction result. We present the implementation of the new method in an R package aws and demonstrate its properties on a number of examples in comparison with other state-of-the-art image reconstruction methods. -

E.A. Vorontsova, A. Gasnikov, E.A. Gorbunov, P. Dvurechensky, Accelerated gradient-free optimization methods with a non-Euclidean proximal operator, Automation and Remote Control, 80 (2019), pp. 1487--1501.

-

L. Calatroni, K. Papafitsoros, Analysis and automatic parameter selection of a variational model for mixed Gaussian and salt & pepper noise removal, Inverse Problems, 35 (2019), pp. 114001/1--114001/37, DOI 10.1088/1361-6420/ab291a .

Abstract

We analyse a variational regularisation problem for mixed noise removal that was recently proposed in [14]. The data discrepancy term of the model combines L1 and L2 terms in an infimal convolution fashion and it is appropriate for the joint removal of Gaussian and Salt & Pepper noise. In this work we perform a finer analysis of the model which emphasises the balancing effect of the two parameters appearing in the discrepancy term. Namely, we study the asymptotic behaviour of the model for large and small values of these parameters and we compare it to the corresponding variational models with L1 and L2 data fidelity. Furthermore, we compute exact solutions for simple data functions taking the total variation as regulariser. Using these theoretical results, we then analytically study a bilevel optimisation strategy for automatically selecting the parameters of the model by means of a training set. Finally, we report some numerical results on the selection of the optimal noise model via such a strategy, which confirm the validity of our analysis and the use of popular data models in the case of "blind" model selection. -

A. Gasnikov, P. Dvurechensky, F. Stonyakin, A.A. Titov, An adaptive proximal method for variational inequalities, Computational Mathematics and Mathematical Physics, 59 (2019), pp. 836--841.

-

S. Guminov, Y. Nesterov, P. Dvurechensky, A. Gasnikov, Accelerated primal-dual gradient descent with linesearch for convex, nonconvex, and nonsmooth optimization problems, Doklady Mathematics, 99 (2019), pp. 125--128.

-

K. Tabelow, E. Balteau, J. Ashburner, M.F. Callaghan, B. Draganski, G. Helms, F. Kherif, T. Leutritz, A. Lutti, Ch. Phillips, E. Reimer, L. Ruthotto, M. Seif, N. Weiskopf, G. Ziegler, S. Mohammadi, hMRI -- A toolbox for quantitative MRI in neuroscience and clinical research, NeuroImage, 194 (2019), pp. 191--210, DOI 10.1016/j.neuroimage.2019.01.029 .

Abstract

Quantitative magnetic resonance imaging (qMRI) finds increasing application in neuroscience and clinical research due to its sensitivity to micro-structural properties of brain tissue, e.g. axon, myelin, iron and water concentration. We introduce the hMRI--toolbox, an easy-to-use open-source tool for handling and processing of qMRI data presented together with an example dataset. This toolbox allows the estimation of high-quality multi-parameter qMRI maps (longitudinal and effective transverse relaxation rates R1 and R2*, proton density PD and magnetisation transfer MT) that can be used for calculation of standard and novel MRI biomarkers of tissue microstructure as well as improved delineation of subcortical brain structures. Embedded in the Statistical Parametric Mapping (SPM) framework, it can be readily combined with existing SPM tools for estimating diffusion MRI parameter maps and benefits from the extensive range of available tools for high-accuracy spatial registration and statistical inference. As such the hMRI--toolbox provides an efficient, robust and simple framework for using qMRI data in neuroscience and clinical research. -

M. Hintermüller, K. Papafitsoros, C.N. Rautenberg, Analytical aspects of spatially adapted total variation regularisation, Journal of Mathematical Analysis and Applications, 454 (2017), pp. 891--935, DOI 10.1016/j.jmaa.2017.05.025 .

Abstract

In this paper we study the structure of solutions of the one dimensional weighted total variation regularisation problem, motivated by its application in signal recovery tasks. We study in depth the relationship between the weight function and the creation of new discontinuities in the solution. A partial semigroup property relating the weight function and the solution is shown and analytic solutions for simple data functions are computed. We prove that the weighted total variation minimisation problem is well-posed even in the case of vanishing weight function, despite the lack of coercivity. This is based on the fact that the total variation of the solution is bounded by the total variation of the data, a result that is also shown here. Finally the relationship to the corresponding weighted fidelity problem is explored, showing that the two problems can produce completely different solutions even for very simple data functions. -

M. Hintermüller, C.N. Rautenberg, S. Rösel, Density of convex intersections and applications, Proceedings of the Royal Society A: Mathematical, Physical and Engineering Sciences, 473 (2017), pp. 20160919/1--20160919/28, DOI 10.1098/rspa.2016.0919 .

Abstract

In this paper we address density properties of intersections of convex sets in several function spaces. Using the concept of Gamma-convergence, it is shown in a general framework, how these density issues naturally arise from the regularization, discretization or dualization of constrained optimization problems and from perturbed variational inequalities. A variety of density results (and counterexamples) for pointwise constraints in Sobolev spaces are presented and the corresponding regularity requirements on the upper bound are identified. The results are further discussed in the context of finite element discretizations of sets associated to convex constraints. Finally, two applications are provided, which include elasto-plasticity and image restoration problems. -

M. Hintermüller, C.N. Rautenberg, T. Wu, A. Langer, Optimal selection of the regularization function in a generalized total variation model. Part II: Algorithm, its analysis and numerical tests, Journal of Mathematical Imaging and Vision, 59 (2017), pp. 515--533.

Abstract

Based on the generalized total variation model and its analysis pursued in part I (WIAS Preprint no. 2235), in this paper a continuous, i.e., infinite dimensional, projected gradient algorithm and its convergence analysis are presented. The method computes a stationary point of a regularized bilevel optimization problem for simultaneously recovering the image as well as determining a spatially distributed regularization weight. Further, its numerical realization is discussed and results obtained for image denoising and deblurring as well as Fourier and wavelet inpainting are reported on. -

M. Hintermüller, C.N. Rautenberg, Optimal selection of the regularization function in a weighted total variation model. Part I: Modeling and theory, Journal of Mathematical Imaging and Vision, 59 (2017), pp. 498--514.

-

M. Deppe, K. Tabelow, J. Krämer, J.-G. Tenberge, P. Schiffler, S. Bittner, W. Schwindt, F. Zipp, H. Wiendl, S.G. Meuth, Evidence for early, non-lesional cerebellar damage in patients with multiple sclerosis: DTI measures correlate with disability, atrophy, and disease duration, Multiple Sclerosis Journal, 22 (2016), pp. 73--84, DOI 10.1177/1352458515579439 .

-

K. Schildknecht, K. Tabelow, Th. Dickhaus, More specific signal detection in functional magnetic resonance imaging by false discovery rate control for hierarchically structured systems of hypotheses, PLOS ONE, 11 (2016), pp. e0149016/1--e0149016/21, DOI 10.1371/journal.pone.0149016 .

-

H.U. Voss, J.P. Dyke, K. Tabelow, N. Schiff, D. Ballon, Magnetic resonance advection imaging of cerebrovascular pulse dynamics, Journal of Cerebral Blood Flow and Metabolism, 37 (2017), pp. 1223--1235 (published online on 24.05.2016), DOI 10.1177/0271678x16651449 .

-

M. Deliano, K. Tabelow, R. König, J. Polzehl, Improving accuracy and temporal resolution of learning curve estimation for within- and across-session analysis, PLOS ONE, 11 (2016), pp. e0157355/1--e0157355/23, DOI 10.1371/journal.pone.0157355 .

Abstract

Estimation of learning curves is ubiquitously based on proportions of correct responses within moving trial windows. In this approach, it is tacitly assumed that learning performance is constant within the moving windows, which, however, is often not the case. In the present study we demonstrate that violations of this assumption lead to systematic errors in the analysis of learning curves, and we explored the dependency of these errors on window size, different statistical models, and learning phase. To reduce these errors for single subjects as well as on the population level, we propose adequate statistical methods for the estimation of learning curves and the construction of confidence intervals, trial by trial. Applied to data from a shuttle-box avoidance experiment with Mongolian gerbils, our approach revealed performance changes occurring at multiple temporal scales within and across training sessions which were otherwise obscured in the conventional analysis. The proper assessment of the behavioral dynamics of learning at a high temporal resolution clarified and extended current descriptions of the process of avoidance learning. It further disambiguated the interpretation of neurophysiological signal changes recorded during training in relation to learning. -

J. Polzehl, K. Tabelow, Low SNR in diffusion MRI models, Journal of the American Statistical Association, 111 (2016), pp. 1480--1490, DOI 10.1080/01621459.2016.1222284 .

Abstract

Noise is a common issue for all magnetic resonance imaging (MRI) techniques such as diffusion MRI and obviously leads to variability of the estimates in any model describing the data. Increasing spatial resolution in MR experiments further diminishes the signal-to-noise ratio (SNR). However, with low SNR the expected signal deviates from the true value. Common modeling approaches therefore lead to a bias in estimated model parameters. Adjustments require an analysis of the data generating process and a characterization of the resulting distribution of the imaging data. We provide an adequate quasi-likelihood approach that employs these characteristics. We elaborate on the effects of typical data preprocessing and analyze the bias effects related to low SNR for the example of the diffusion tensor model in diffusion MRI. We then demonstrate the relevance of the problem using data from the Human Connectome Project. -

K. Tabelow, S. Mohammadi, N. Weiskopf, J. Polzehl, POAS4SPM --- A toolbox for SPM to denoise diffusion MRI data, Neuroinformatics, 13 (2015), pp. 19--29.

Abstract

We present an implementation of a recently developed noise reduction algorithm for dMRI data, called multi-shell position orientation adaptive smoothing (msPOAS), as a toolbox for SPM. The method intrinsically adapts to the structures of different size and shape in dMRI and hence avoids blurring typically observed in non-adaptive smoothing. We give examples for the usage of the toolbox and explain the determination of experiment-dependent parameters for an optimal performance of msPOAS. -

K. Tabelow, H.U. Voss, J. Polzehl, Local estimation of the noise level in MRI using structural adaptation, Medical Image Analysis, 20 (2015), pp. 76--86.

Abstract

We present a method for local estimation of the signal-dependent noise level in magnetic resonance images. The procedure uses a multi-scale approach to adaptively infer on local neighborhoods with similar data distribution. It exploits a maximum-likelihood estimator for the local noise level. The validity of the method was evaluated on repeated diffusion data of a phantom and simulated data using T1-data corrupted with artificial noise. Simulation results are compared with a recently proposed estimate. The method was applied to a high-resolution diffusion dataset to obtain improved diffusion model estimation results and to demonstrate its usefulness in methods for enhancing diffusion data. -

J. Krämer, M. Deppe, K. Göbel, K. Tabelow, H. Wiendl, S.G. Meuth, Recovery of thalamic microstructural damage after Shiga toxin 2-associated hemolytic-uremic syndrome, Journal of the Neurological Sciences, 356 (2015), pp. 175--183.

-

S. Mohammadi, K. Tabelow, L. Ruthotto, Th. Feiweier, J. Polzehl, N. Weiskopf, High-resolution diffusion kurtosis imaging at 3T enabled by advanced post-processing, Frontiers in Neuroscience, 8 (2015), pp. 427/1--427/14.

-

S. Becker, K. Tabelow, S. Mohammadi, N. Weiskopf, J. Polzehl, Adaptive smoothing of multi-shell diffusion-weighted magnetic resonance data by msPOAS, NeuroImage, 95 (2014), pp. 90--105.

Abstract

In this article we present a noise reduction method (msPOAS) for multi-shell diffusion-weighted magnetic resonance data. To our knowledge, this is the first smoothing method which allows simultaneous smoothing of all q-shells. It is applied directly to the diffusion weighted data and consequently allows subsequent analysis by any model. Due to its adaptivity, the procedure avoids blurring of the inherent structures and preserves discontinuities. MsPOAS extends the recently developed position-orientation adaptive smoothing (POAS) procedure to multi-shell experiments. At the same time it considerably simplifies and accelerates the calculations. The behavior of the algorithm msPOAS is evaluated on diffusion-weighted data measured on a single shell and on multiple shells. -

M. Welvaert, K. Tabelow, R. Seurinck, Y. Rosseel, Adaptive smoothing as inference strategy: More specificity for unequally sized or neighboring regions, Neuroinformatics, 11 (2013), pp. 435--445.

Abstract

Although spatial smoothing of fMRI data can serve multiple purposes, increasing the sensitivity of activation detection is probably its greatest benefit. However, this increased detection power comes with a loss of specificity when non-adaptive smoothing (i.e. the standard in most software packages) is used. Simulation studies and analysis of experimental data were performed using the R packages neuRosim and fmri. In these studies, we systematically investigated the effect of spatial smoothing on the power and number of false positives in two particular cases that are often encountered in fMRI research: (1) Single condition activation detection for regions that differ in size, and (2) multiple condition activation detection for neighbouring regions. Our results demonstrate that adaptive smoothing is superior in both cases because fewer false positives are introduced by the spatial smoothing process compared to standard Gaussian smoothing or FDR inference of unsmoothed data. -

S. Becker, K. Tabelow, H.U. Voss, A. Anwander, R.M. Heidemann, J. Polzehl, Position-orientation adaptive smoothing of diffusion weighted magnetic resonance data (POAS), Medical Image Analysis, 16 (2012), pp. 1142--1155.

Abstract

We introduce an algorithm for diffusion weighted magnetic resonance imaging data enhancement based on structural adaptive smoothing in both space and diffusion direction. The method, called POAS, does not refer to a specific model for the data, like the diffusion tensor or higher order models. It works by embedding the measurement space into a space with defined metric and group operations, in this case the Lie group of three-dimensional Euclidean motion SE(3). Subsequently, pairwise comparisons of the values of the diffusion weighted signal are used for adaptation. The position-orientation adaptive smoothing preserves the edges of the observed fine and anisotropic structures. The POAS-algorithm is designed to reduce noise directly in the diffusion weighted images and consequently also to reduce bias and variability of quantities derived from the data for specific models. We evaluate the algorithm on simulated and experimental data and demonstrate that it can be used to reduce the number of applied diffusion gradients and hence acquisition time while achieving similar quality of data, or to improve the quality of data acquired in a clinically feasible scan time setting. -

K. Tabelow, H.U. Voss, J. Polzehl, Modeling the orientation distribution function by mixtures of angular central Gaussian distributions, Journal of Neuroscience Methods, 203 (2012), pp. 200--211.

Abstract

In this paper we develop a tensor mixture model for diffusion weighted imaging data using an automatic model selection criterion for the order of tensor components in a voxel. We show that the weighted orientation distribution function for this model can be expanded into a mixture of angular central Gaussian distributions. We show properties of this model in extensive simulations and in a high angular resolution experimental data set. The results suggest that the model may improve imaging of cerebral fiber tracts. We demonstrate how inference on canonical model parameters may give rise to new clinical applications. -

K. Tabelow, J.D. Clayden, P. Lafaye de Micheaux, J. Polzehl, V.J. Schmid, B. Whitcher, Image analysis and statistical inference in neuroimaging with R, NeuroImage, 55 (2011), pp. 1686--1693.

Abstract

R is a language and environment for statistical computing and graphics. It can be considered an alternative implementation of the S language developed in the 1970s and 1980s for data analysis and graphics (Becker and Chambers, 1984; Becker et al., 1988). The R language is part of the GNU project and offers versions that compile and run on almost every major operating system currently available. We highlight several R packages built specifically for the analysis of neuroimaging data in the context of functional MRI, diffusion tensor imaging, and dynamic contrast-enhanced MRI. We review their methodology and give an overview of their capabilities for neuroimaging. In addition we summarize some of the current activities in the area of neuroimaging software development in R. -

K. Tabelow, J. Polzehl, Statistical parametric maps for functional MRI experiments in R: The package fmri, Journal of Statistical Software, 44 (2011), pp. 1--21.

Abstract

The package fmri is provided for the analysis of single-run functional Magnetic Resonance Imaging (fMRI) data. It implements structural adaptive smoothing methods with signal detection for adaptive noise reduction, which avoids blurring the edges of activation areas. fmri provides fMRI analysis from time series modeling to signal detection and publication-ready images. -

J. Bardin, J. Fins, D. Katz, J. Hersh, L. Heier, K. Tabelow, J. Dyke, D. Ballon, N. Schiff, H. Voss, Dissociations between behavioral and fMRI-based evaluations of cognitive function after brain injury, Brain, 134 (2011), pp. 769--782.

Abstract

Functional neuroimaging methods hold promise for the identification of cognitive function and communication capacity in some severely brain-injured patients who may not retain sufficient motor function to demonstrate their abilities. We studied seven severely brain-injured patients and a control group of 14 subjects using a novel hierarchical functional magnetic resonance imaging assessment utilizing mental imagery responses. Whereas the control group showed consistent and accurate (for communication) blood-oxygen-level-dependent responses without exception, the brain-injured subjects showed a wide variation in the correlation of blood-oxygen-level-dependent responses and overt behavioural responses. Specifically, the brain-injured subjects dissociated bedside and functional magnetic resonance imaging-based command following and communication capabilities. These observations reveal significant challenges in developing validated functional magnetic resonance imaging-based methods for clinical use and raise interesting questions about underlying brain function assayed using these methods in brain-injured subjects. -

J. Polzehl, K. Tabelow, Beyond the Gaussian model in diffusion-weighted imaging: The package dti, Journal of Statistical Software, 44 (2011), pp. 1--26.

Abstract

Diffusion weighted imaging is a magnetic resonance based method to investigate tissue micro-structure, especially in the human brain, via water diffusion. Since the standard diffusion tensor model for the acquired data fails in a large portion of the brain voxels, more sophisticated models have been developed. Here, we report on the package dti and how some of these models can be used with the package. -

E. Diederichs, A. Juditsky, V. Spokoiny, Ch. Schütte, Sparse non-Gaussian component analysis, IEEE Transactions on Information Theory, 56 (2010), pp. 3033--3047.

-

J. Polzehl, H.U. Voss, K. Tabelow, Structural adaptive segmentation for statistical parametric mapping, NeuroImage, 52 (2010), pp. 515--523.

Abstract

Functional Magnetic Resonance Imaging inherently involves noisy measurements and a severe multiple test problem. Smoothing is usually used to reduce the effective number of multiple comparisons and to locally integrate the signal and hence increase the signal-to-noise ratio. Here, we provide a new structural adaptive segmentation algorithm (AS) that naturally combines the signal detection with noise reduction in one procedure. Moreover, the new method is closely related to a recently proposed structural adaptive smoothing algorithm and preserves shape and spatial extent of activation areas without blurring the borders. -

K. Tabelow, V. Piëch, J. Polzehl, H.U. Voss, High-resolution fMRI: Overcoming the signal-to-noise problem, Journal of Neuroscience Methods, 178 (2009), pp. 357--365.

Abstract

Increasing the spatial resolution in functional Magnetic Resonance Imaging (fMRI) inherently lowers the signal-to-noise ratio (SNR). In order to still detect functionally significant activations in high-resolution images, spatial smoothing of the data is required. However, conventional non-adaptive smoothing comes with a reduced effective resolution, foiling the benefit of the higher acquisition resolution. We show how our recently proposed structural adaptive smoothing procedure for functional MRI data can improve signal detection of high-resolution fMRI experiments regardless of the lower SNR. The procedure is evaluated on human visual and sensory-motor mapping experiments. In these applications, the higher resolution could be fully utilized and high-resolution experiments were outperforming normal resolution experiments by means of both statistical significance and information content. -

J. Polzehl, K. Tabelow, Structural adaptive smoothing in diffusion tensor imaging: The R package dti, Journal of Statistical Software, 31 (2009), pp. 1--24.

Abstract

Diffusion Weighted Imaging has become and will certainly continue to be an important tool in medical research and diagnostics. Data obtained with Diffusion Weighted Imaging are characterized by a high noise level. Thus, estimation of quantities like anisotropy indices or the main diffusion direction may be significantly compromised by noise in clinical or neuroscience applications. Here, we present a new package dti for R, which provides functions for the analysis of diffusion weighted data within the diffusion tensor model. This includes smoothing by a recently proposed structural adaptive smoothing procedure based on the Propagation-Separation approach in the context of the widely used Diffusion Tensor Model. We extend the procedure and show, how a correction for Rician bias can be incorporated. We use a heteroscedastic nonlinear regression model to estimate the diffusion tensor. The smoothing procedure naturally adapts to different structures of different size and thus avoids oversmoothing edges and fine structures. We illustrate the usage and capabilities of the package through some examples. -

K. Tabelow, J. Polzehl, A.M. Uluğ, J.P. Dyke, R. Watts, L.A. Heier, H.U. Voss, Accurate localization of brain activity in presurgical fMRI by structure adaptive smoothing, IEEE Transactions on Medical Imaging, 27 (2008), pp. 531--537.

Abstract

An important problem of the analysis of fMRI experiments is to achieve some noise reduction of the data without blurring the shape of the activation areas. As a novel solution to this problem, the Propagation-Separation approach (PS), a structure adaptive smoothing method, has been proposed recently. PS adapts to different shapes of activation areas by generating a spatial structure corresponding to similarities and differences between time series in adjacent locations. In this paper we demonstrate how this method results in more accurate localization of brain activity. First, it is shown in numerical simulations that PS is superior to Gaussian smoothing with respect to the accurate description of the shape of activation clusters and results in fewer false detections. Second, in a study of 37 presurgical planning cases we found that PS and Gaussian smoothing often yield different results, and we present examples showing aspects of the superiority of PS as applied to presurgical planning. -

K. Tabelow, J. Polzehl, V. Spokoiny, H.U. Voss, Diffusion tensor imaging: Structural adaptive smoothing, NeuroImage, 39 (2008), pp. 1763--1773.

Abstract

Diffusion Tensor Imaging (DTI) data is characterized by a high noise level. Thus, estimation errors of quantities like anisotropy indices or the main diffusion direction used for fiber tracking are relatively large and may significantly confound the accuracy of DTI in clinical or neuroscience applications. Besides pulse sequence optimization, noise reduction by smoothing the data can be pursued as a complementary approach to increase the accuracy of DTI. Here, we suggest an anisotropic structural adaptive smoothing procedure, which is based on the Propagation-Separation method and preserves the structures seen in DTI and their different sizes and shapes. It is applied to artificial phantom data and a brain scan. We show that this method significantly improves the quality of the estimate of the diffusion tensor and hence enables one either to reduce the number of scans or to enhance the input for subsequent analysis such as fiber tracking. -

D. Divine, J. Polzehl, F. Godtliebsen, A propagation-separation approach to estimate the autocorrelation in a time-series, Nonlinear Processes in Geophysics, 15 (2008), pp. 591--599.

-

V. Katkovnik, V. Spokoiny, Spatially adaptive estimation via fitted local likelihood techniques, IEEE Transactions on Signal Processing, 56 (2008), pp. 873--886.

Abstract

This paper offers a new technique for spatially adaptive estimation. The local likelihood is exploited for nonparametric modelling of observations and estimated signals. The approach is based on the assumption of a local homogeneity of the signal: for every point there exists a neighborhood in which the signal can be well approximated by a constant. The fitted local likelihood statistics is used for selection of an adaptive size of this neighborhood. The algorithm is developed for quite a general class of observations subject to the exponential distribution. The estimated signal can be uni- and multivariable. We demonstrate a good performance of the new algorithm for Poissonian image denoising and compare the new method with the intersection of confidence intervals (ICI) technique, which also exploits a selection of an adaptive neighborhood for estimation. -

O. Minet, H. Gajewski, J.A. Griepentrog, J. Beuthan, The analysis of laser light scattering during rheumatoid arthritis by image segmentation, Laser Physics Letters, 4 (2007), pp. 604--610.

-

H.U. Voss, K. Tabelow, J. Polzehl, O. Tchernichovski, K. Maul, D. Salgado-Commissariat, D. Ballon, S.A. Helekar, Functional MRI of the zebra finch brain during song stimulation suggests a lateralized response topography, Proceedings of the National Academy of Sciences of the United States of America, 104 (2007), pp. 10667--10672.

Abstract

Electrophysiological and activity-dependent gene expression studies of birdsong have contributed to the understanding of the neural representation of natural sounds. However, we have limited knowledge about the overall spatial topography of song representation in the avian brain. Here, we adapt the noninvasive functional MRI method in mildly sedated zebra finches (Taeniopygia guttata) to localize and characterize song driven brain activation. Based on the blood oxygenation level-dependent signal, we observed a differential topographic responsiveness to playback of bird's own song, tutor song, conspecific song, and a pure tone as a nonsong stimulus. The bird's own song caused a stronger response than the tutor song or tone in higher auditory areas. This effect was more pronounced in the medial parts of the forebrain. We found left-right hemispheric asymmetry in sensory responses to songs, with significant discrimination between stimuli observed only in the right hemisphere. This finding suggests that perceptual responses might be lateralized in zebra finches. In addition to establishing the feasibility of functional MRI in sedated songbirds, our results demonstrate spatial coding of song in the zebra finch forebrain, based on developmental familiarity and experience. -

J. Polzehl, K. Tabelow, Adaptive smoothing of digital images: The R package adimpro, Journal of Statistical Software, 19 (2007), pp. 1--17.

Abstract

Digital imaging has become omnipresent in the past years with a bulk of applications ranging from medical imaging to photography. When pushing the limits of resolution and sensitivity, noise has always been a major issue. However, commonly used non-adaptive filters reduce noise only at the cost of a reduced effective spatial resolution. Here we present a new package adimpro for R, which implements the Propagation-Separation approach by Polzehl and Spokoiny (2006) for smoothing digital images. This method naturally adapts to different structures of different size in the image and thus avoids oversmoothing edges and fine structures. We extend the method for imaging data with spatial correlation. Furthermore we show how the estimation of the dependence between variance and mean value can be included. We illustrate the use of the package through some examples. -

J. Polzehl, K. Tabelow, fmri: A package for analyzing fmri data, Newsletter of the R Project for Statistical Computing, 7 (2007), pp. 13--17.

-

K. Tabelow, J. Polzehl, H.U. Voss, V. Spokoiny, Analyzing fMRI experiments with structural adaptive smoothing procedures, NeuroImage, 33 (2006), pp. 55--62.

Abstract

Data from functional magnetic resonance imaging (fMRI) consists of time series of brain images which are characterized by a low signal-to-noise ratio. In order to reduce noise and to improve signal detection the fMRI data is spatially smoothed. However, the common application of a Gaussian filter does this at the cost of loss of information on spatial extent and shape of the activation area. We suggest using the propagation-separation procedures introduced by Polzehl and Spokoiny (2006) instead. We show that this significantly improves the information on the spatial extent and shape of the activation region with similar results for the noise reduction. To complete the statistical analysis, signal detection is based on thresholds defined by random field theory. Effects of adaptive and non-adaptive smoothing are illustrated by artificial examples and an analysis of experimental data. -

G. Blanchard, M. Kawanabe, M. Sugiyama, V. Spokoiny, K.-R. Müller, In search of non-Gaussian components of a high-dimensional distribution, Journal of Machine Learning Research, 7 (2006), pp. 247--282.

Abstract

Finding non-Gaussian components of high-dimensional data is an important preprocessing step for efficient information processing. This article proposes a new linear method to identify the “non-Gaussian subspace” within a very general semi-parametric framework. Our proposed method, called NGCA (Non-Gaussian Component Analysis), is essentially based on the fact that we can construct a linear operator which, to any arbitrary nonlinear (smooth) function, associates a vector which belongs to the low dimensional non-Gaussian target subspace up to an estimation error. By applying this operator to a family of different nonlinear functions, one obtains a family of different vectors lying in a vicinity of the target space. As a final step, the target space itself is estimated by applying PCA to this family of vectors. We show that this procedure is consistent in the sense that the estimation error tends to zero at a parametric rate, uniformly over the family. Numerical examples demonstrate the usefulness of our method. -

H. Gajewski, J.A. Griepentrog, A descent method for the free energy of multicomponent systems, Discrete and Continuous Dynamical Systems, 15 (2006), pp. 505--528.

-

A. Goldenshluger, V. Spokoiny, Recovering convex edges of image from noisy tomographic data, IEEE Transactions on Information Theory, 52 (2006), pp. 1322--1334.

-

J. Polzehl, V. Spokoiny, Propagation-separation approach for local likelihood estimation, Probability Theory and Related Fields, 135 (2006), pp. 335--362.

Abstract

The paper presents a unified approach to local likelihood estimation for a broad class of nonparametric models, including, e.g., regression, density, Poisson and binary response models. The method extends the adaptive weights smoothing (AWS) procedure introduced by the authors [Adaptive weights smoothing with applications to image segmentation. J. R. Stat. Soc., Ser. B 62, 335-354 (2000)] in the context of image denoising. The main idea of the method is to describe a greatest possible local neighborhood of every design point in which the local parametric assumption is justified by the data. The method is especially powerful for model functions having large homogeneous regions and sharp discontinuities. The performance of the proposed procedure is illustrated by numerical examples for density estimation and classification. We also establish some remarkable theoretical non-asymptotic results on properties of the new algorithm. This includes the “propagation” property which particularly yields the root-$n$ consistency of the resulting estimate in the homogeneous case. We also state an “oracle” result which implies rate optimality of the estimate under usual smoothness conditions and a “separation” result which explains the sensitivity of the method to structural changes. -

J. Griepentrog, On the unique solvability of a nonlocal phase separation problem for multicomponent systems, Banach Center Publications, 66 (2004), pp. 153--164.

-

A. Goldenshluger, V. Spokoiny, On the shape-from-moments problem and recovering edges from noisy Radon data, Probability Theory and Related Fields, 128 (2004), pp. 123--140.

-

J. Polzehl, V. Spokoiny, Image denoising: Pointwise adaptive approach, The Annals of Statistics, 31 (2003), pp. 30--57.

Abstract

A new method of pointwise adaptation has been proposed and studied in Spokoiny (1998) in the context of estimation of piecewise smooth univariate functions. The present paper extends that method to estimation of bivariate grey-scale images composed of large homogeneous regions with smooth edges and observed with noise on a gridded design. The proposed estimator $\hat{f}(x)$ at a point $x$ is simply the average of observations over a window $\hat{U}(x)$ selected in a data-driven way. The theoretical properties of the procedure are studied for the case of piecewise constant images. We present a nonasymptotic bound for the accuracy of estimation at a specific grid point $x$ as a function of the number of pixels $n$, of the distance from the point of estimation to the closest boundary and of smoothness properties and orientation of this boundary. It is also shown that the proposed method provides a near optimal rate of estimation near edges and inside homogeneous regions. We briefly discuss algorithmic aspects and the complexity of the procedure. The numerical examples demonstrate a reasonable performance of the method and they are in agreement with the theoretical issues. An example from satellite (SAR) imaging illustrates the applicability of the method. -

J. Polzehl, V. Spokoiny, Functional and dynamic Magnetic Resonance Imaging using vector adaptive weights smoothing, Journal of the Royal Statistical Society. Series C. Applied Statistics, 50 (2001), pp. 485--501.

Abstract

We consider the problem of statistical inference for functional and dynamic Magnetic Resonance Imaging (MRI). A new approach is proposed which extends the adaptive weights smoothing (AWS) procedure from Polzehl and Spokoiny (2000) originally designed for image denoising. We demonstrate how the AWS method can be applied for time series of images, which typically occur in functional and dynamic MRI. It is shown how signal detection in functional MRI and analysis of dynamic MRI can benefit from spatially adaptive smoothing. The performance of the procedure is illustrated using real and simulated data. -

J. Polzehl, V. Spokoiny, Adaptive Weights Smoothing with applications to image restoration, Journal of the Royal Statistical Society. Series B. Statistical Methodology, 62 (2000), pp. 335--354.

Abstract

We propose a new method of nonparametric estimation which is based on locally constant smoothing with an adaptive choice of weights for every pair of data-points. Some theoretical properties of the procedure are investigated. Then we demonstrate the performance of the method on some simulated univariate and bivariate examples and compare it with other nonparametric methods. Finally we discuss applications of this procedure to magnetic resonance and satellite imaging.

Contributions to Collected Editions

-

E. Gorbunov, A. Sadiev, M. Danilova, S. Horváth, G. Gidel, P. Dvurechensky, A. Gasnikov, P. Richtárik, High-probability convergence for composite and distributed stochastic minimization and variational inequalities with heavy-tailed noise, in: International Conference on Machine Learning, 21--27 July 2024, Vienna, Austria, R. Salakhutdinov, Z. Kolter, K. Heller, A. Weller, N. Oliver, J. Scarlett, F. Berkenkamp, eds., 235 of Proceedings of Machine Learning Research, 2024, pp. 15951--16070.

-

P. Dvurechensky, M. Staudigl, Barrier algorithms for constrained non-convex optimization, in: International Conference on Machine Learning, 21--27 July 2024, Vienna, Austria, R. Salakhutdinov, Z. Kolter, K. Heller, A. Weller, N. Oliver, J. Scarlett, F. Berkenkamp, eds., 235 of Proceedings of Machine Learning Research, 2024, pp. 12190--12214.

-

A.H. Erhardt, K. Tsaneva-Atanasova, G.T. Lines, E.A. Martens, Editorial: Dynamical systems, PDEs and networks for biomedical applications: Mathematical modeling, analysis and simulations, 10 of Front. Phys., Sec. Statistical and Computational Physics, Frontiers, Lausanne, Switzerland, 2023, pp. 01--03, DOI 10.3389/fphy.2022.1101756 .

-

A. Sadiev, M. Danilova, E. Gorbunov, S. Horváth, G. Gidel, P. Dvurechensky, P. Richtárik, High-probability bounds for stochastic optimization and variational inequalities: The case of unbounded variance, in: Proceedings of the 40th International Conference on Machine Learning, A. Krause, E. Brunskill, K. Cho, B. Engelhardt, S. Sabato, J. Scarlett, eds., 202 of Proceedings of Machine Learning Research, 2023, pp. 29563--29648.

-

A. Beznosikov, P. Dvurechensky, A. Koloskova, V. Samokhin, S.U. Stich, A. Gasnikov, Decentralized local stochastic extra-gradient for variational inequalities, in: Advances in Neural Information Processing Systems 35 (NeurIPS 2022), S. Koyejo, S. Mohamed, A. Agarwal, D. Belgrave, K. Cho, A. Oh, eds., 2022, pp. 38116--38133.

-

E. Gorbunov, M. Danilova, D. Dobre, P. Dvurechensky, A. Gasnikov, G. Gidel, Clipped stochastic methods for variational inequalities with heavy-tailed noise, in: Advances in Neural Information Processing Systems 35 (NeurIPS 2022), S. Koyejo, S. Mohamed, A. Agarwal, D. Belgrave, K. Cho, A. Oh, eds., 2022, pp. 31319--31332.

-

D. Yarmoshik, A. Rogozin, O.O. Khamisov, P. Dvurechensky, A. Gasnikov, Decentralized convex optimization under affine constraints for power systems control, in: Mathematical Optimization Theory and Operations Research. MOTOR 2022, P. Pardalos, M. Khachay, V. Mazalov, eds., 13367 of Lecture Notes in Computer Science, Springer, Cham, 2022, pp. 62--75, DOI 10.1007/978-3-031-09607-5_5 .

-

A. Agafonov, P. Dvurechensky, G. Scutari, A. Gasnikov, D. Kamzolov, A. Lukashevich, A. Daneshmand, An accelerated second-order method for distributed stochastic optimization, in: 60th IEEE Conference on Decision and Control (CDC), IEEE, 2021, pp. 2407--2413, DOI 10.1109/CDC45484.2021.9683400 .

-

A. Daneshmand, G. Scutari, P. Dvurechensky, A. Gasnikov, Newton method over networks is fast up to the statistical precision, in: Proceedings of the 38th International Conference on Machine Learning, 139 of Proceedings of Machine Learning Research, 2021, pp. 2398--2409.

-

E. Gladin, A. Sadiev, A. Gasnikov, P. Dvurechensky, A. Beznosikov, M. Alkousa, Solving smooth min-min and min-max problems by mixed oracle algorithms, in: Mathematical Optimization Theory and Operations Research: Recent Trends, A. Strekalovsky, Y. Kochetov, T. Gruzdeva, A. Orlov, eds., 1476 of Communications in Computer and Information Science book series (CCIS), Springer International Publishing, Basel, 2021, pp. 19--40, DOI 10.1007/978-3-030-86433-0_2 .

Abstract

In this paper, we consider two types of problems that have some similarity in their structure, namely, min-min problems and min-max saddle-point problems. Our approach is based on considering the outer minimization problem as a minimization problem with an inexact oracle. This inexact oracle is calculated via an inexact solution of the inner problem, which is either a minimization or a maximization problem. Our main assumption is that the available oracle is mixed: it is only possible to evaluate the gradient w.r.t. the outer block of variables which corresponds to the outer minimization problem, whereas for the inner problem, only a zeroth-order oracle is available. To solve the inner problem, we use the accelerated gradient-free method with a zeroth-order oracle. To solve the outer problem, we use either an inexact variant of Vaidya's cutting-plane method or a variant of the accelerated gradient method. As a result, we propose a framework that leads to non-asymptotic complexity bounds for both min-min and min-max problems. Moreover, we estimate separately the number of first- and zeroth-order oracle calls that are sufficient to reach any desired accuracy. -

S. Guminov, P. Dvurechensky, N. Tupitsa, A. Gasnikov, On a combination of alternating minimization and Nesterov's momentum, in: Proceedings of the 38th International Conference on Machine Learning, 139 of Proceedings of Machine Learning Research, 2021, pp. 3886--3898.

Abstract

Alternating minimization (AM) optimization algorithms have been known for a long time and are of importance in machine learning problems, among which we are mostly motivated by approximating optimal transport distances. AM algorithms assume that the decision variable is divided into several blocks and minimization in each block can be done explicitly or cheaply with high accuracy. The ubiquitous Sinkhorn's algorithm can be seen as an alternating minimization algorithm for the dual to the entropy-regularized optimal transport problem. We introduce an accelerated alternating minimization method with a $1/k^2$ convergence rate, where $k$ is the iteration counter. This improves over the known bound $1/k$ for general AM methods and for Sinkhorn's algorithm. Moreover, our algorithm converges faster than gradient-type methods in practice as it is free of the choice of the step-size and is adaptive to the local smoothness of the problem. We show that the proposed method is primal-dual, meaning that if we apply it to a dual problem, we can reconstruct the solution of the primal problem with the same convergence rate. We apply our method to the entropy-regularized optimal transport problem and show experimentally that it outperforms Sinkhorn's algorithm. -

A. Rogozin, M. Bochko, P. Dvurechensky, A. Gasnikov, V. Lukoshkin, An accelerated method for decentralized distributed stochastic optimization over time-varying graphs, in: 2021 IEEE 60th Annual Conference on Decision and Control (CDC), IEEE, 2021, pp. 3367--3373, DOI 10.1109/CDC45484.2021.9683400 .

-